If Your Time Is Short

- Facebook pledged to ban certain claims about coronavirus vaccines in December. It wasn’t comprehensive; the ban was supposed to address claims “debunked by public health experts” as well as claims centered on false ingredients and dire health outcomes.

- Although Facebook has since widened the scope of targeted claims, posts questioning the safety of vaccination continue to proliferate on the platform.

In the early days of the pandemic, a Facebook page for Earthley promised an easy way to avoid getting COVID-19. The wellness company promoted a Vitamin D cream and an elderberry elixir to strengthen the body’s immune response and "help fight off the possibility of you or your family getting the coronavirus."

The post appeared to violate Facebook’s policy for false claims of coronavirus treatments and cures. It was serious enough that the Federal Trade Commission mentioned it in a warning letter amid the agency’s crackdown on coronavirus scams.

Nearly one year later, Earthley is still spreading false information about COVID-19 and the vaccines — despite Facebook’s new rules against that content.

The page has published out-of-context news stories about vaccine side effects and skirted moderation by misspelling the word "vaccine" in posts questioning whether vaccination is safe. We reached out to Earthley for a comment, but we haven’t heard back.

"It doesn’t take an advanced, opaque algorithm like Facebook likes to use to predict this behavior," said John Gregory, deputy health editor at NewsGuard, a firm that tracks online misinformation. "The pages we’ve seen spreading these myths … they are pages that have been spreading health misinformation in most cases for years."

Facebook’s strategy for tamping down misinformation about vaccines is to single out certain categories of anti-vaccine claims, rather than taking a broad approach. The result is that many types of false or exaggerated claims against vaccines are allowed to remain on the platform.

As Facebook faces criticism from both parties in Congress, it is reluctant to take additional action that could be seen as a violation of free speech.

"People often say things that aren’t verifiably true, but that speak to their lived experiences, and I think we have to be careful restricting that," CEO Mark Zuckerberg said during a March 25 House hearing.

From the outset of the pandemic, Facebook promised a tough approach to coronavirus misinformation — one that didn’t solely rely on findings from its third-party fact-checking partners, including PolitiFact.

Facebook issued a broad prohibition in January 2020 on false claims or conspiracy theories about the coronavirus "that could cause harm to people who believe them."

As vaccinations began in the U.S. in December, the company announced an additional ban on coronavirus vaccine claims "that have been debunked by public health experts." Almost two months later, the company pledged to take down more specific claims. The full list includes posts that say the vaccines "kill or seriously harm people" or include "anything not on the vaccine ingredient list."

Facebook says it has removed millions of posts that violate those policies since December, "including 2 million since February alone," spokesperson Kevin McAlister told PolitiFact.

The company uses artificial intelligence to find false claims about the coronavirus and track copies of those claims when they are shared, wrote Guy Rosen, Facebook’s vice president of integrity, in a March 22 blog post. "As a result, we’ve removed more than 12 million pieces of content about COVID-19 and vaccines."

But Americans who logged on to Facebook and Instagram, its sister platform, over the past few months may still have seen posts that appear to violate those terms. The posts include:

- The COVID-19 vaccine could lead to prion diseases, Alzheimer’s, ALS and other neurodegenerative diseases. (Pants on Fire)

- "If you take the vaccine, you'll be enrolled in a pharmacovigilance tracking system." (False)

- There are nanoparticles in the COVID-19 vaccine that will help people "locate you" via 5G networks. (Pants on Fire)

PolitiFact fact-checked those posts as part of its partnership with Facebook to combat false news and misinformation. When we rate a post as false or misleading, Facebook reduces its reach in the News Feed and alerts users who shared it. Unless the posts violate other policies, they remain on the platform. (Read more about our partnership with Facebook.)

PolitiFact is one of 10 news organizations in the U.S. alone that fact-check false and misleading claims on Facebook, a program that began in December 2016. Facebook’s moderation policies, including those that prohibit misinformation about COVID-19 vaccines, are independent of its fact-checking program.

Facebook’s rules against COVID-19 vaccine misinformation were created to address the most extreme cases of misinformation: claims that the vaccine kills you, causes autism or infertility, changes your DNA or "turns you into a monkey." After we sent Facebook the posts we found about a "pharmacovigilance tracking system" and 5G nanoparticles, the company removed them for violating its policies against COVID-19 and vaccine misinformation.

But that kind of enforcement has been uneven.

"Our enforcement isn’t perfect, which is why we’re always improving it while also working with outside experts to make sure that our policies remain in the right place," McAlister said.

Some of the most popular anti-vaccine posts are first-person testimonials about purported side effects.

In December, when vaccines from Pfizer-BioNTech and Moderna were authorized for emergency use, videos emerged on Facebook claiming to show a nurse passing out after getting a shot (even though she gets dizzy with any kind of pain) and a nurse saying she developed Bell’s palsy (even though there was no record of such a reaction in Tennessee). Other users have made unproven claims that the vaccines caused them to shake and convulse. (Public health officials say there’s no evidence to suggest such a connection.)

Those viral videos surfaced through Facebook’s fact-checking partnership with PolitiFact. The effect of a negative rating is a demotion in News Feed and in some cases a warning label. Although Facebook doesn’t allow posts that falsely claim the COVID-19 vaccines "kill or seriously harm people," first-person testimonials are allowed to live on the platform because they are "personal experiences or anecdotes."

"The goal of this policy is to reduce health harm to people, while also allowing people to discuss, debate and share their personal experiences, opinions and news related to the COVID-19 pandemic," Facebook says.

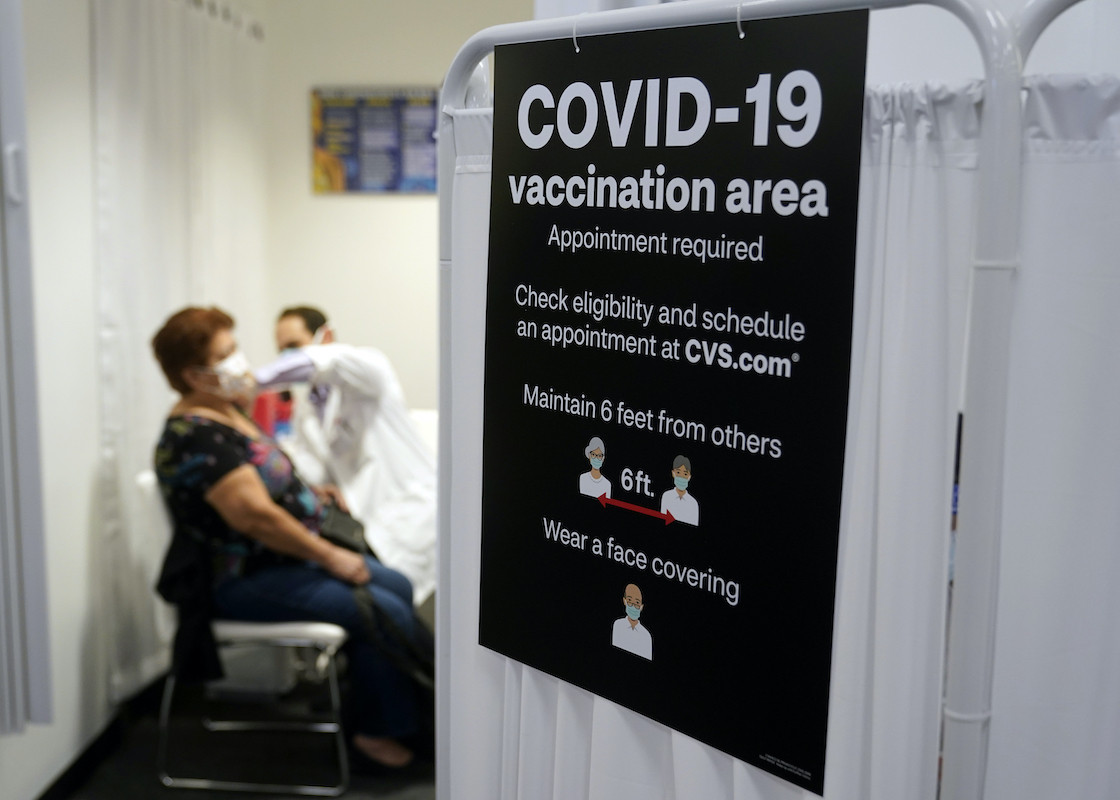

In this March 1, 2021, file photo, a patient receives a shot of the Moderna COVID-19 vaccine at a CVS Pharmacy branch in Los Angeles. (AP)

Experts worry about bad actors abusing the system.

"Being comprehensive about what does violate and what doesn’t violate (Facebook’s policy) is a strategy, but it can be something that’s easily worked around," said Kolina Koltai, a postdoctoral fellow at the University of Washington who studies anti-vaccine misinformation. "Other platforms have very simple policies that are very stringent about their moderation."

One example is Pinterest, which bans "anti-vaccination advice" and "misinformation about public health or safety emergencies." The company doesn’t have a set list of anti-vaccine claims that are against the rules, so Pinterest can be flexible about which posts it removes.

Other social media companies use policies in between the anti-misinformation policies of Facebook and Pinterest. Twitter prohibits tweets that "advance harmful false or misleading narratives about COVID-19 vaccinations" and has said it removed 8,400 posts since December. Over the past six months, YouTube said it has removed more than 30,000 videos with misleading or false claims about the vaccines.

Those numbers are a fraction of the millions of posts Facebook says it has removed for violating its COVID-19 misinformation rules. In his blog post, Rosen said that Facebook has "over 35,000 people working on these challenges," an apparent reference to the number of employees who work on the platform’s "safety and security," a category that also includes hate speech and harassment.

But with about 2.8 billion monthly users, Facebook is the most-used social media platform in the world and the biggest vector for misinformation about vaccines.

An analysis from First Draft, a nonprofit that studies online misinformation, found that at least 3,200 posts containing claims explicitly banned by Facebook were published on the platform between February and March, amassing thousands of likes, shares and comments.

"The approach Facebook really should take is looking at the history of these sources and these pages — and using that in order to inform their policies, rather than the kind of whack-a-mole approach later on," Gregory said. "If the pandemic has proven anything, it’s that people who promoted misinformation before are going to promote misinformation about this."

Multiple independent reports have found that a small but well-followed group of misinformers is responsible for many of the anti-vaccine posts that Facebook users see.

Facebook took action to stop some of these accounts, but not all.

In November, NewsGuard published a report that listed "super-spreaders" of misinformation about COVID-19 vaccine development. Of the 14 English-language pages that the company cataloged, five are now gone from Facebook, Gregory said. (Facebook confirmed to PolitiFact that two of the pages, WorldTruth.tv and Energy Therapy, were removed for violating its policies against coronavirus vaccine misinformation.)

The NewsGuard super-spreaders that are still on the platform include the Truth About Cancer and Dr. Christiane Northrup, each of which has hundreds of thousands of followers. The Truth About Cancer has published articles saying "the vaccine is the pandemic" and that the Pfizer vaccine is "untested and dangerous." Northrup, a Maine gynecologist, called coronavirus vaccines "experimental weapons against humanity" in a March 14 Facebook video.

"This thing was invented to get humanity injected," she said of COVID-19, just before telling her followers to watch a video that claims the vaccines cause autoimmune diseases and death. (They don’t.)

On March 24, the Center for Countering Digital Hate, a nonprofit that has criticized technology companies’ misinformation policies, published its own report on vaccine misinformation super-spreaders. It found that "just 12 individuals and their organizations are responsible for the bulk of anti-vaxx content shared or posted on Facebook and Twitter." The list includes Robert F. Kennedy Jr., Joseph Mercola, and Ty and Charlene Bollinger, the couple that runs the Truth About Cancer.

During the House hearing on disinformation and extremism, Rep. Mike Doyle, D-Pa., cited the report to criticize the way that tech platforms address misinformation about vaccines.

"If you think the vaccines work, why have your companies allowed accounts that repeatedly offend your vaccine misinformation policies to remain up?" Doyle asked. "You’re exposing tens of millions of users to this every day."

When asked if Facebook would remove the 12 super-spreaders, Zuckerberg demurred.

"Congressman, I would need to look at the, and have our team look at the exact examples to make sure they violate our policies. But we have a policy in place around this," he said.

Our Sources

Associated Press, "Biden stands by May timeline for vaccines for all US adults," March 3, 2021

Associated Press, "RFK Jr. kicked off Instagram for vaccine misinformation," Feb. 11, 2021

Axios, "Exclusive: YouTube removed 30,000 videos with COVID misinformation," March 11, 2021

The Center for Countering Digital Hate, "The Anti-Vaxx Industry," July 2020

The Center for Countering Digital Hate, "The Disinformation Dozen," March 2021

CrowdTangle, accessed March 17, 2021

Email interview with Facebook spokesperson, March 19, 2021

Email interview with Facebook spokesperson Kevin McAlister, March 17, 2021

Email interview with Facebook spokesperson Kevin McAlister, March 30, 2021

Email interview with Brian Southwell, senior director of science in the public sphere at RTI International, March 17, 2021

Email interview with Nat Gyenes, Digital Health Lab lead at Meedan, March 17, 2021

Facebook, "An Update on Our Work to Keep People Informed and Limit Misinformation About COVID-19," Feb. 8, 2021

Facebook, "Combating COVID-19 Misinformation Across Our Apps," March 25, 2020

Facebook, "COVID-19 and Vaccine Policy Updates & Protections"

Facebook, "How We’re Tackling Misinformation Across Our Apps," March 22, 2021

Facebook, "Keeping People Safe and Informed About the Coronavirus," Dec. 18, 2020

Facebook, "Mark Zuckerberg Announces Facebook’s Plans to Help Get People Vaccinated Against COVID-19," March 15, 2021

Facebook, "Preparing for Election Day," Oct. 7, 2020

Facebook, "Reaching Billions of People With COVID-19 Vaccine Information," Feb. 8, 2021

Facebook post from Earthley, March 13, 2021

Facebook post from Earthley, March 14, 2021

Facebook video from Dr. Christiane Northrup, March 14, 2021

Factcheck.org, "RFK Jr. Video Pushes Known Vaccine Misrepresentations," March 11, 2021

Federal Trade Commission, "RE: Unapproved and Misbranded Products Related to Coronavirus Disease 2019 (COVID-19)," April 9, 2020

Food and Drug Administration, COVID-19 Vaccines

Gallup, "Two-Thirds of Americans Not Satisfied With Vaccine Rollout," Feb. 10, 2021

Healthline, "Here’s When We May Have Enough COVID-19 Vaccine for Herd Immunity," March 1, 2021

House Energy and Commerce Committee, "Disinformation Nation: Social Media's Role in Promoting Extremism and Misinformation," March 25, 2021

Interview with John Gregory, deputy health editor at NewsGuard, March 17, 2021

Interview with Kendrick McDonald, senior analyst at NewsGuard, March 17, 2021

Interview with Kolina Koltai, a postdoctoral fellow at the University of Washington’s Center for the Informed Public, March 17, 2021

Mainer, "Dr. No," Nov. 9, 2020

NewsGuard, "Misinformation about development of a COVID-19 vaccine spreads widely on Facebook"

PBS Newshour, "How Pinterest beat back vaccine misinformation," Sept. 29, 2020

Pinterest, Community guidelines

PolitiFact, "A Nashville nurse did not develop Bell’s Palsy after receiving the COVID-19 vaccine," Dec. 30, 2020

PolitiFact, "Anti-vaccine video of fainting nurse lacks context," Dec. 21, 2020

PolitiFact, "COVID-19 vaccine does not cause death, autoimmune diseases," March 4, 2021

PolitiFact, "Deaths after vaccination don’t prove that COVID-19 vaccine is lethal," Feb. 16, 2021

PolitiFact, "Doctors administering COVID-19 vaccines aren’t guilty of war crimes," Feb. 11, 2021

PolitiFact, "Examining how science determines COVID-19's 'herd immunity threshold,’" Jan. 15, 2021

PolitiFact, "No, COVID-19 vaccines do not contain nanoparticles that will allow you to be tracked via 5G networks," March 12, 2021

PolitiFact, "Receiving COVID-19 vaccine does not enroll you in a government tracking system or medical experiment," Feb. 26, 2021

PolitiFact, "The coronavirus vaccine doesn’t cause Alzheimer’s, ALS," Feb. 26, 2021

PolitiFact, "The ‘shaking’ COVID-19 vaccine side-effect videos and what we know about them," Jan. 20, 2021

PolitiFact, "Vaccine misinformation: preparing for infodemic’s ‘second wave,’" Dec. 21, 2020

Statista, "Most popular social networks worldwide as of January 2021, ranked by number of active users," Feb. 9, 2021

The Truth About Cancer, Cancer Treatments

The Truth About Cancer, "Tennessee, Texas Among Guinea Pigs for New COVID Vaccine," Nov. 24, 2020

The Truth About Cancer, "The vaccine *IS* the pandemic: 80% of nuns vaccinated at Kentucky convent tested positive for coronavirus two days later," March 3, 2021

Twitter, "COVID-19: Our approach to misleading vaccine information," Dec. 16, 2020

Twitter, "Updates to our work on COVID-19 vaccine misinformation," March 1, 2021

The Washington Post, "Massive Facebook study on users’ doubt in vaccines finds a small group appears to play a big role in pushing the skepticism," March 14, 2021

Wired, "Facebook says it’s taking on Covid disinformation. So what’s all this?" March 10, 2021

YouTube, "COVID-19 medical misinformation policy"